Spatial Computing

Towards natural interactions

Have you ever used your elbow to navigate your smartphone? Maybe give it a try.

Most of the time, we use our thumb or index finger to interact with everyday objects and devices. The rest of our body is barely part of these interactions. This results in unnatural interactions. For example, why do I have to press a button with my thumb on a mobile phone to start a song on a music speaker? Why can’t I look or point at the speaker to play the music?

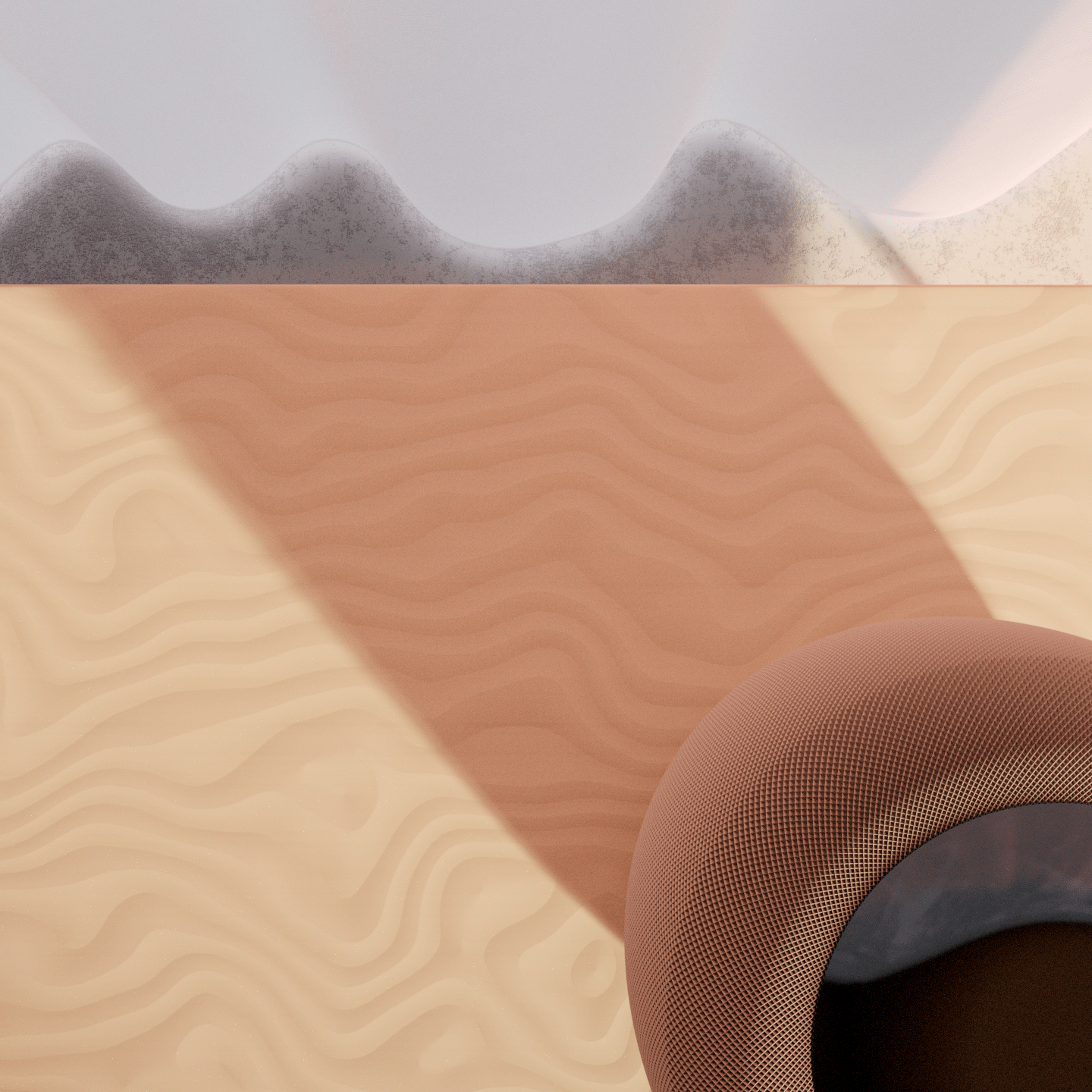

How to model a hand that looks semi-realistic?

We modelled six versions of the hand until we came up with a decent one. So keep going!

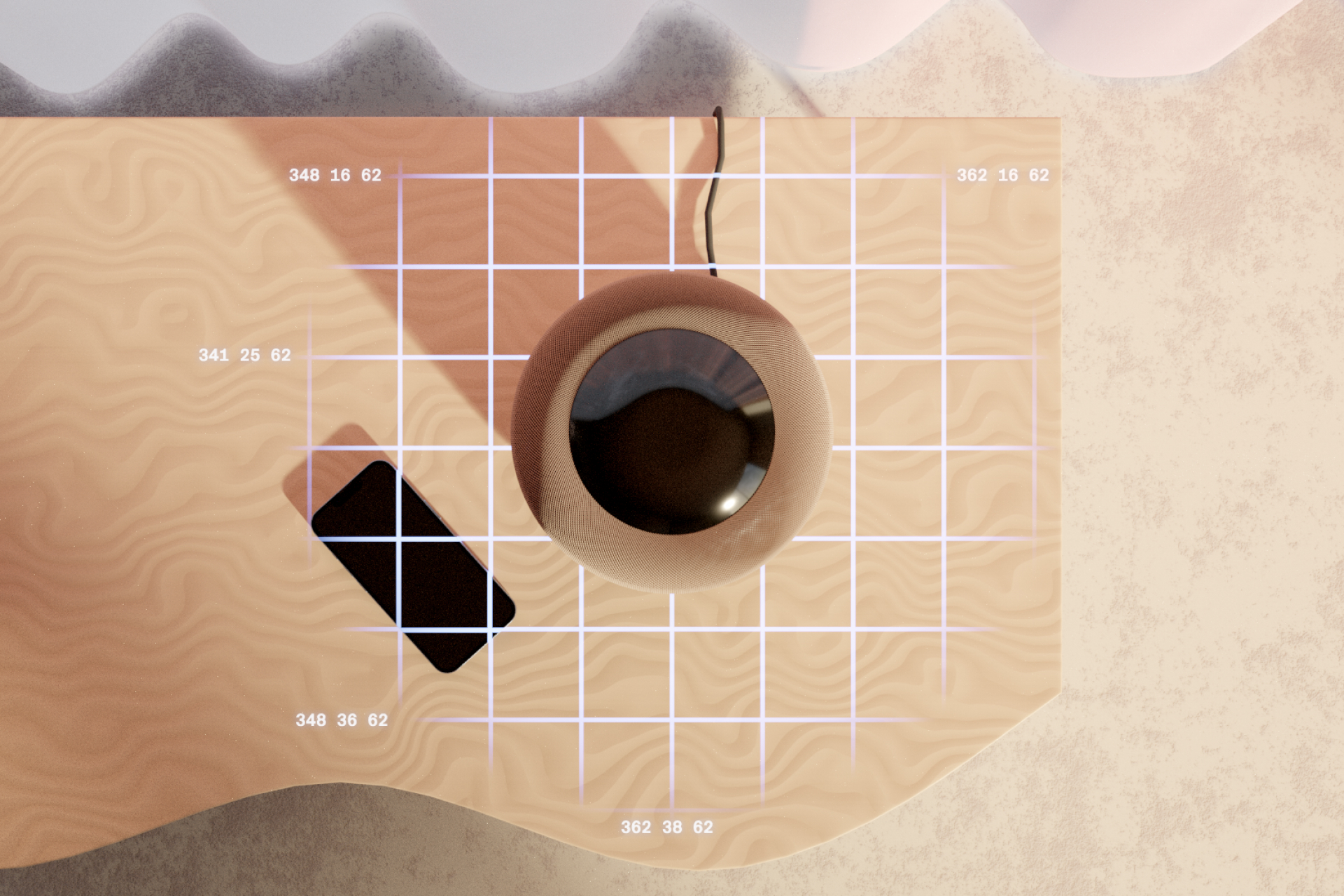

How to show an invisible process?

We used a laser-like scan to visualize that the speaker recognizes its environment.

What if full-body or gesture interactions were the new normal? How would interfaces look and feel?

Fellow student Fabi Lou and I visualized this concept with three animations based on the interaction with a music speaker. The animations illustrate how everyday objects learn to »see« their environment, how our hands function as an interface, and how we might interact with a music speaker in a few years.

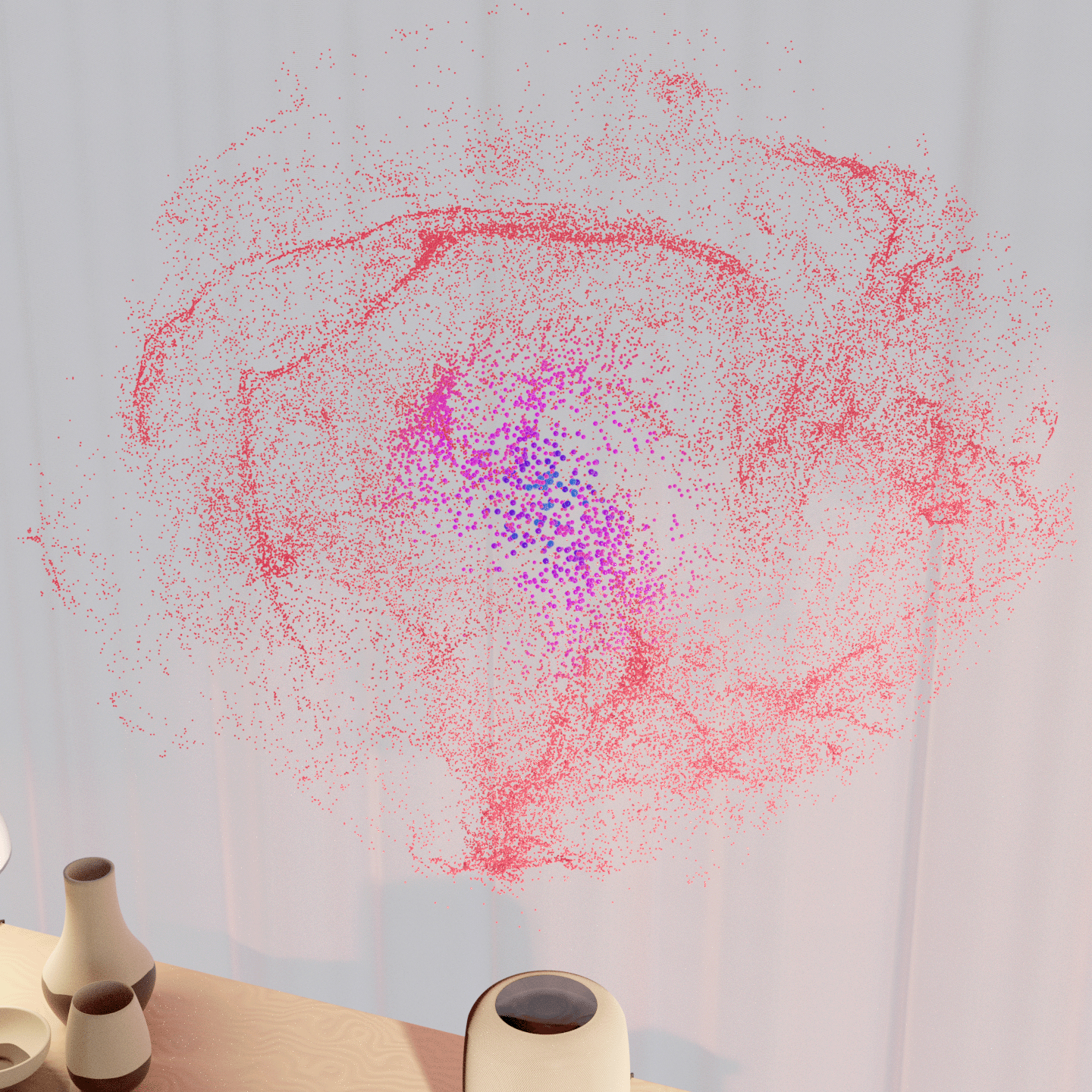

How to visualize sound for a mainly visual outcome?

We used a particle system that reacts to the music, so the sound stays understandable even when people turn off their audio.

Design: Fabi Lou Sax and Felix Schultz

Supervised by Prof. Ralph Ammer

Tools we used: Blender, After Effects, Houdini